Machine Translation Evaluation Tool

Machine translation is useful wherever large volumes of documents need to be translated quickly. The CrossLang Machine Translation Evaluation tool lets users evaluate machine translation in terms of quality and productivity.

MT Evaluation Tasks

Productivity Task

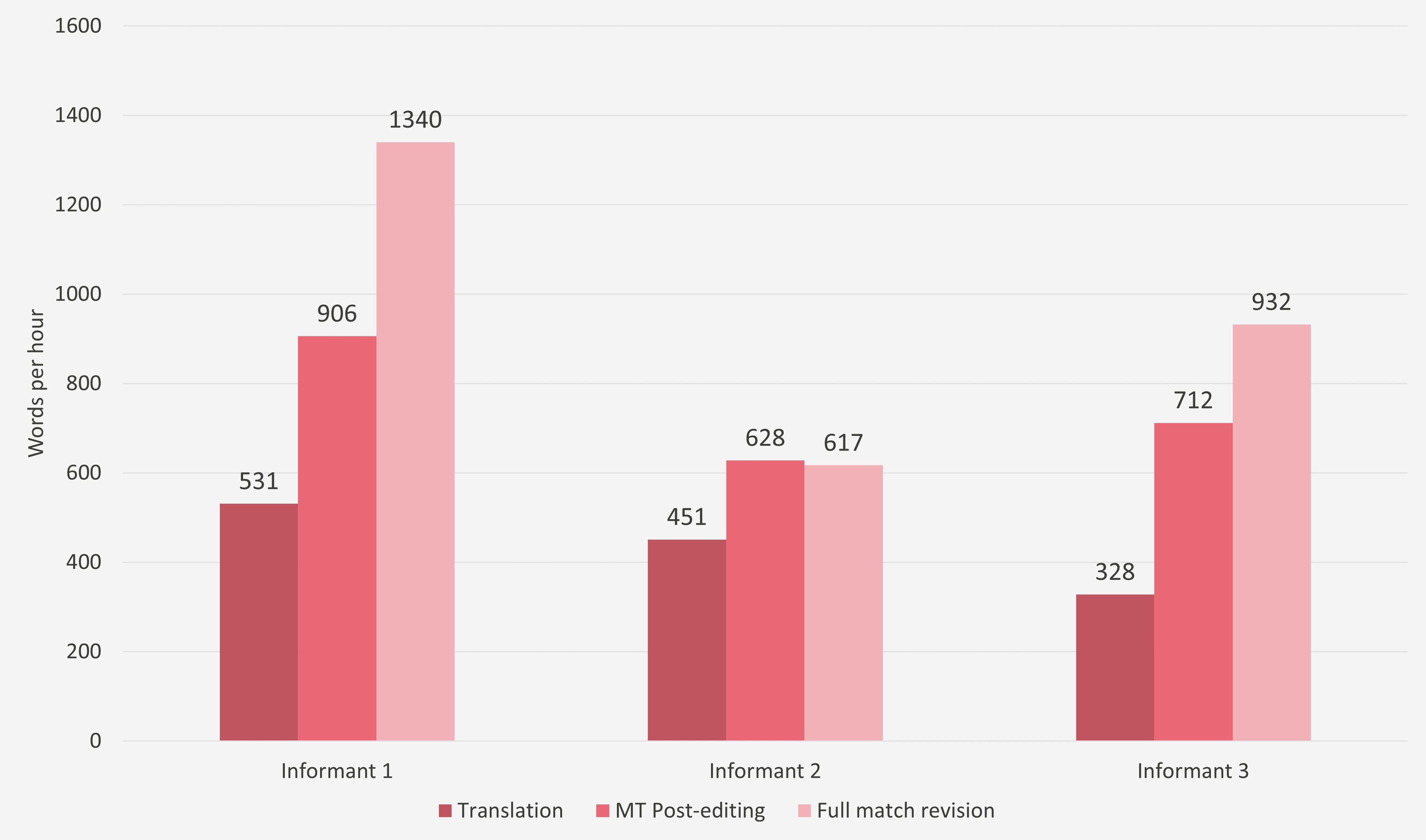

This type of evaluation assesses a translation task and estimates, based on a test document, how much productivity would increase with the tested method. For example, two tasks can be run side by side: one in which linguists translate a text from scratch, and another in which they post-edit machine translation output. The MT Evaluation tool measures how long linguists take to perform each task. Because the test documents are relevant to the customer (i.e. the same language pair and a similar domain or field), CrossLang can give customers a clear view of which method works best for their use case.
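As a rough illustration of how such timings could be turned into a productivity estimate, the sketch below compares throughput (words per hour) for the two tasks. The function names, the data layout, and the timings are hypothetical and are not taken from the MT Evaluation tool itself.

```python
# Minimal sketch: estimate productivity gain from timed translation tasks.
# All names and numbers here are illustrative assumptions.

def words_per_hour(runs):
    """Throughput over a list of (word_count, seconds) measurements."""
    total_words = sum(words for words, _ in runs)
    total_seconds = sum(seconds for _, seconds in runs)
    return total_words * 3600 / total_seconds

def productivity_gain(baseline_wph, tested_wph):
    """Relative gain of the tested method over the baseline (0.5 means +50%)."""
    return (tested_wph - baseline_wph) / baseline_wph

# Hypothetical timings: (words translated, seconds spent) per linguist.
from_scratch_runs = [(500, 3600), (500, 3600)]
post_editing_runs = [(500, 2400), (500, 2400)]

from_scratch_wph = words_per_hour(from_scratch_runs)
post_editing_wph = words_per_hour(post_editing_runs)
gain = productivity_gain(from_scratch_wph, post_editing_wph)

print(f"From scratch: {from_scratch_wph:.0f} words/hour")
print(f"Post-editing: {post_editing_wph:.0f} words/hour")
print(f"Estimated productivity gain: {gain:.0%}")
```

Pooling words and time across linguists before dividing keeps one very fast or very slow run from dominating the estimate; a per-linguist breakdown would be an equally reasonable choice.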

Quality Task

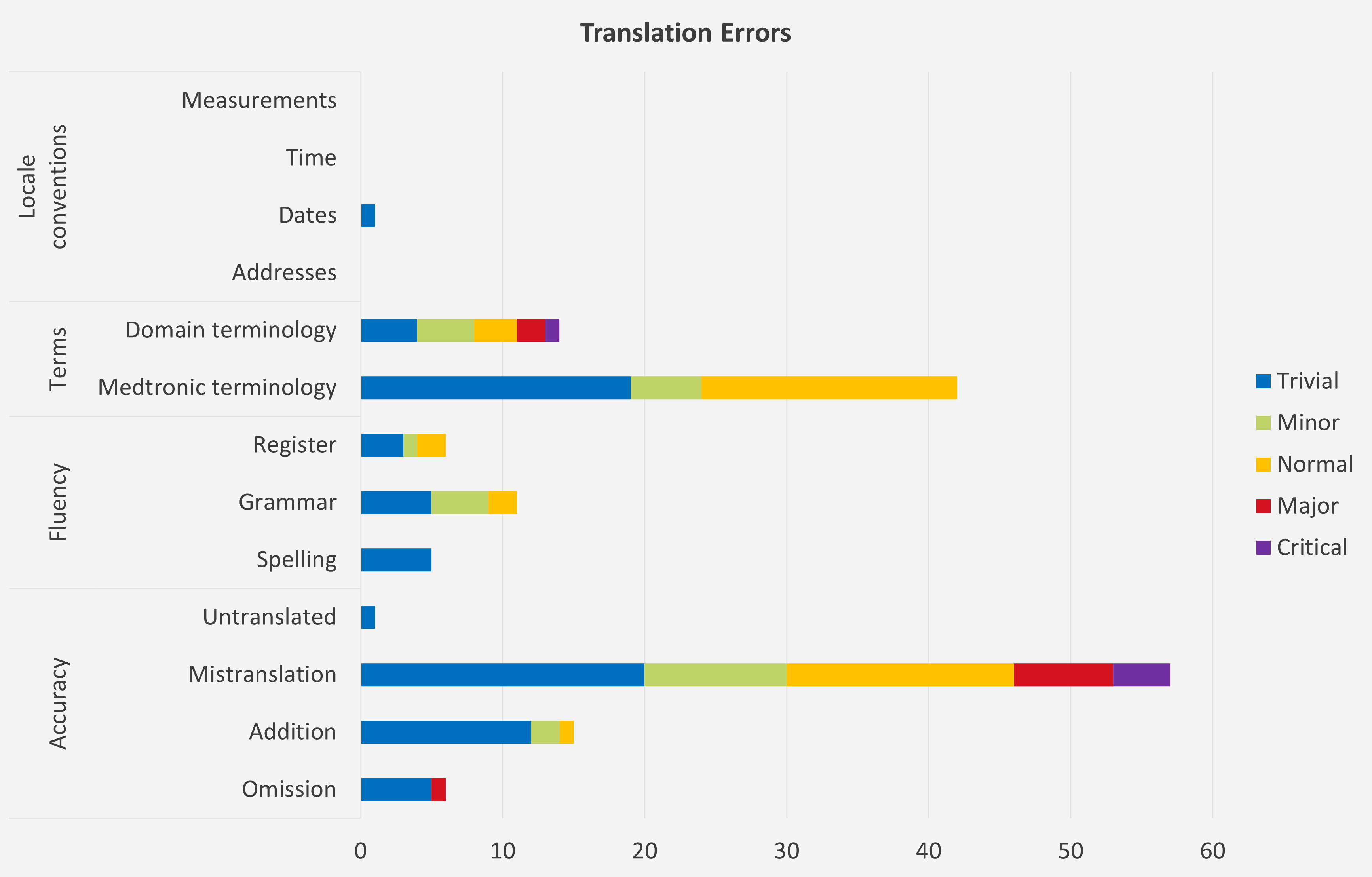

The quality task allows CrossLang to improve the quality of a custom machine translation system. If, for example, a system scores poorly on grammar, extra attention can be paid to that area in the next training round. It also lets customers choose the MT engine that best suits their needs. Evaluating results on criteria such as accuracy and fluency gives a clear overview of the quality of a specific engine.
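One simple way to picture this is to average evaluator ratings per criterion and look for the weakest one. The sketch below assumes a 1 to 5 rating scale and three criteria; both the scale and the data are hypothetical and do not describe the tool's actual scoring scheme.

```python
# Minimal sketch: find the weakest quality criterion from segment ratings.
# The 1-5 scale, criterion names, and scores are illustrative assumptions.
from statistics import mean

# One dict per evaluated segment.
ratings = [
    {"accuracy": 4, "fluency": 5, "grammar": 2},
    {"accuracy": 5, "fluency": 4, "grammar": 3},
    {"accuracy": 4, "fluency": 4, "grammar": 2},
]

def average_by_criterion(ratings):
    """Mean score per criterion across all rated segments."""
    criteria = ratings[0].keys()
    return {criterion: mean(r[criterion] for r in ratings) for criterion in criteria}

averages = average_by_criterion(ratings)
weakest = min(averages, key=averages.get)

for criterion, score in averages.items():
    print(f"{criterion}: {score:.2f}")
print(f"Weakest area: {weakest}")
```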

Post-editing Task

Comparison Task

A comparison task allows CrossLang to rank different translation outputs. Many test combinations are possible: a human translation against a machine translation, machine translations from several providers, or an old machine translation system against an updated version. Evaluators can either rate and rank the quality of the outputs or indicate which option they prefer. The outputs can then be compared statistically and in detail, giving the customer an overview of the available options and their benefits so that they can optimise their process.
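To make the ranking idea concrete, the sketch below aggregates evaluator rankings into a mean rank per output and counts head-to-head preferences. The system names and rankings are hypothetical, and the sketch assumes every evaluator ranks every output; it is not a description of how the tool computes its statistics.

```python
# Minimal sketch: aggregate evaluator rankings of translation outputs.
# System names and rankings are illustrative assumptions.
from collections import defaultdict

# Each evaluator lists the outputs from best to worst.
rankings = [
    ["human", "mt_new", "mt_old"],
    ["mt_new", "human", "mt_old"],
    ["human", "mt_new", "mt_old"],
]

def mean_ranks(rankings):
    """Average position of each output (1 = best) across evaluators."""
    totals = defaultdict(int)
    for ranking in rankings:
        for position, system in enumerate(ranking, start=1):
            totals[system] += position
    return {system: total / len(rankings) for system, total in totals.items()}

def pairwise_wins(rankings, a, b):
    """Number of evaluators who ranked output a above output b."""
    return sum(1 for ranking in rankings if ranking.index(a) < ranking.index(b))

ranks = mean_ranks(rankings)
ordered = sorted(ranks, key=ranks.get)

for system in ordered:
    print(f"{system}: mean rank {ranks[system]:.2f}")
print("human preferred over mt_new by",
      pairwise_wins(rankings, "human", "mt_new"), "of", len(rankings), "evaluators")
```

Mean rank gives an overall ordering, while the pairwise count answers the narrower question of which of two specific outputs evaluators prefer.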

Human Machine Translation Evaluation

More and more companies wish to apply a machine translation and/or post-editing step in their translation workflow. But where to start? Which machine translation provider suits a certain company or context best?

A professional human evaluation answers these questions. Read more about human evaluations in our blog.