GDPR

In 2018, the European Union set a new standard for data protection: the General Data Protection Regulation (GDPR). The GDPR lays out principles for sharing and re-using people’s personal, often sensitive, information. Companies that want to store or share this data have to comply with the GDPR. Innovations in language technology now make it possible to process such sensitive data safely. The solution: anonymising the information.

Anonymisation

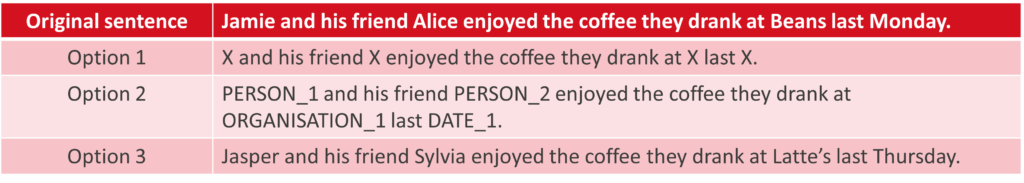

Anonymisation detects elements that can be used to identify a person (names, dates, addresses, etc.) and masks or removes them. These elements are called named entities (NEs). They must be masked or removed in such a way that the resulting text can no longer be linked to the original individual or organisation. For small amounts of data, manual anonymisation can be a viable option: a person can edit a text until all sensitive, private information has been masked or removed. With large volumes of text, however, this becomes infeasible, and anonymisation should be automated. Masking an NE means either blackening it (e.g. replacing it with the label X) or replacing it with a more informative label such as PERSON. Replacing an NE with something else, such as a label, is also referred to as “pseudonymisation”.
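The following minimal Python sketch makes the difference between removing and masking concrete. It assumes the NEs have already been detected and are given as character offsets with a type. The `Entity` class, the `anonymise` function and the types PERSON, LOCATION and DATE are illustrative choices, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Entity:
    start: int  # character offset where the NE begins
    end: int    # character offset just past the NE
    label: str  # NE type, e.g. "PERSON" (illustrative type names)


def anonymise(text: str, entities: list[Entity], mode: str = "label") -> str:
    """Remove or mask every entity span in `text`.

    mode: "remove"  -> delete the NE,
          "blacken" -> replace it with X,
          "label"   -> replace it with its type, e.g. PERSON.
    """
    if mode not in ("remove", "blacken", "label"):
        raise ValueError(f"unknown mode: {mode}")
    parts, pos = [], 0
    for ent in sorted(entities, key=lambda e: e.start):
        parts.append(text[pos:ent.start])  # keep the text before the NE
        if mode == "blacken":
            parts.append("X")
        elif mode == "label":
            parts.append(ent.label)
        pos = ent.end  # skip over the NE itself ("remove" appends nothing)
    parts.append(text[pos:])  # keep the text after the last NE
    return "".join(parts)


text = "Anna Peeters moved to Ghent on 3 May 2019."
entities = [Entity(0, 12, "PERSON"), Entity(22, 27, "LOCATION"), Entity(31, 41, "DATE")]
print(anonymise(text, entities, "blacken"))  # X moved to X on X.
print(anonymise(text, entities, "label"))    # PERSON moved to LOCATION on DATE.
```

With `mode="remove"`, the same sentence becomes ` moved to  on .`. This shows why removal alone often leaves text that is hard to read.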

Anonymisation techniques

Automated anonymisation involves two steps. The first step is to detect the NEs, i.e. to identify exactly which words or phrases should be anonymised. This is done with a system trained on manually annotated data. Manual annotation consists of reading through a body of text, marking which words or phrases constitute an NE, and recording their type (name, address, etc.). From these annotations, a model can be trained that “learns” not only which words or phrases are NEs, but also what type of NE they are.
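Annotations are often stored as character offsets and then converted to one tag per token before a model is trained on them. The sketch below assumes the widely used BIO scheme and a crude regular-expression tokeniser. In BIO, B- marks the first token of an NE, I- a following token inside the same NE, and O a token outside any NE. The `bio_tags` function is hypothetical, and the model training itself is not shown.

```python
import re


def bio_tags(text: str, spans: list[tuple[int, int, str]]) -> list[tuple[str, str]]:
    """Turn character-level annotations into (token, tag) pairs in the BIO scheme.

    spans: (start, end, type) tuples as marked by a human annotator.
    """
    pairs = []
    # Crude tokeniser (an assumption): runs of word characters, or single punctuation marks.
    for m in re.finditer(r"\w+|[^\w\s]", text):
        tag = "O"  # default: token lies outside every NE
        for start, end, ne_type in spans:
            if start <= m.start() and m.end() <= end:
                # First token of the span gets B-, later tokens get I-.
                tag = ("B-" if m.start() == start else "I-") + ne_type
                break
        pairs.append((m.group(), tag))
    return pairs


text = "Anna Peeters moved to Ghent on 3 May 2019."
spans = [(0, 12, "PERSON"), (22, 27, "LOCATION"), (31, 41, "DATE")]
for token, tag in bio_tags(text, spans):
    print(f"{token}\t{tag}")
```

The first lines of output are `Anna B-PERSON`, `Peeters I-PERSON` and `moved O`. Pairs like these are what a sequence-labelling model would learn from.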

The second step is to replace each identified NE, for which several strategies exist. Personal data can be (1) blackened, e.g. replaced with X, (2) replaced with a label indicating its type, such as PERSON, or (3) replaced with a similar word, for example another person’s name.

Which replacement strategy to apply depends on the sensitivity of the data and on the purpose of the anonymised text. For example, the third strategy (replacing an NE with a similar word) returns a readable text, which makes life easier for a human reader. If, however, the anonymised text will be fed to machine learning software (for example a tool for producing summaries or for translating text), a label may be more suitable.
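Replacing NEs with similar words can be sketched as follows. This minimal illustration assumes NEs are already detected and given as (start, end, type) tuples. The `SURROGATES` lists and the `pseudonymise` function are hypothetical. One design choice is worth noting: each original name maps to the same surrogate every time it occurs, so the anonymised text stays coherent for a human reader.

```python
# Hypothetical surrogate pools per NE type; a real system would need far larger
# lists and would also consider gender, spelling conventions, date formats, etc.
SURROGATES = {
    "PERSON": ["Jan Janssens", "Marie Dubois", "Tom Claes"],
    "LOCATION": ["Leuven", "Namur", "Bruges"],
}


def pseudonymise(text: str, entities: list[tuple[int, int, str]]) -> str:
    """Replace each NE with a similar word of the same type.

    entities: (start, end, type) tuples. Identical originals always get the
    same surrogate, so repeated mentions of one person stay consistent.
    """
    mapping: dict[str, str] = {}  # original string -> surrogate
    used: dict[str, int] = {}     # NE type -> number of surrogates handed out
    parts, pos = [], 0
    for start, end, ne_type in sorted(entities):
        original = text[start:end]
        if original not in mapping:
            pool = SURROGATES.get(ne_type, [])
            i = used.get(ne_type, 0)
            used[ne_type] = i + 1
            # Fall back to the type label if the pool is missing or exhausted.
            mapping[original] = pool[i] if i < len(pool) else ne_type
        parts.append(text[pos:start])
        parts.append(mapping[original])
        pos = end
    parts.append(text[pos:])
    return "".join(parts)


text = "Anna met Piet in Ghent. Later, Anna called Piet."
entities = [(0, 4, "PERSON"), (9, 13, "PERSON"), (17, 22, "LOCATION"),
            (31, 35, "PERSON"), (43, 47, "PERSON")]
print(pseudonymise(text, entities))
# Jan Janssens met Marie Dubois in Leuven. Later, Jan Janssens called Marie Dubois.
```

If no surrogate is available for a type, the sketch falls back to the type label, which is the label strategy.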

Quality of anonymisation

Thanks to new technology, the output of machine learning models for anonymisation keeps improving. However, complete accuracy cannot (yet) be guaranteed: an anonymisation tool may classify too many words or phrases as NEs, or miss some of them. Even so, the risk that the people described in the original text can be identified remains low, especially when NEs are replaced with similar words (the third replacement strategy). Because any NEs the tool missed look just like the substituted ones, a hacker trying to steal information cannot tell which NEs are the result of anonymisation and which are original.
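The two kinds of error described above are commonly measured as precision and recall. Precision is the share of flagged words that really are NEs, and recall is the share of real NEs that were found. The minimal Python sketch below assumes exact matching of (start, end, type) spans, which is only one of several possible evaluation conventions, and the `evaluate` function is hypothetical. For anonymisation, low recall is usually the more serious problem, because every missed NE is personal data left in the text.

```python
def evaluate(predicted: set, gold: set) -> tuple[float, float, float]:
    """Exact-match precision, recall and F1 over (start, end, type) NE spans."""
    true_pos = len(predicted & gold)  # spans found with the right boundaries and type
    # Precision falls when the tool flags words that are not NEs.
    precision = true_pos / len(predicted) if predicted else 0.0
    # Recall falls when the tool misses NEs.
    recall = true_pos / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Sentence: "Anna Peeters moved to Ghent on 3 May 2019."
gold = {(0, 12, "PERSON"), (22, 27, "LOCATION"), (31, 41, "DATE")}
# The tool wrongly flags "moved" (offsets 13-18) and misses the date.
predicted = {(0, 12, "PERSON"), (22, 27, "LOCATION"), (13, 18, "PERSON")}
p, r, f = evaluate(predicted, gold)
print(f"precision={p:.2f} recall={r:.2f} f1={f:.2f}")  # precision=0.67 recall=0.67 f1=0.67
```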

Anonymisation models can be built using general data, but even better results can be achieved when they are trained on, and used for, a specific domain. For example, a model trained on medical data can specialise in anonymising medical texts.

Purpose of anonymisation tools

Anonymisation tools have a wide range of applications, and the techniques explained above can be applied across many sectors and industries. For example, anonymised data can be used to train artificial intelligence models, such as machine translation systems. Large amounts of sensitive data can also be made accessible for research purposes. The translation industry, too, can benefit from anonymisation tools, for instance by anonymising translation memories.

Research

In collaboration with various partners, CrossLang has conducted in-depth research in the field of anonymisation. For instance, a study was carried out within the framework of the European Commission’s ELRC (European Language Resource Coordination) Action.

The future has a lot in store for this field: as the models’ results improve, it will become increasingly safe to share and process large batches of data, an exciting prospect for machine learning!