A couple of years ago, neural machine translation stepped into the limelight. Thanks to deep learning, the quality of machine translation has increased substantially, and it is expected to keep improving.

Yet, how do we measure actual ‘improvement’ in the quality of machine translation outputs? How can we ensure that the output from a machine translation system is sufficiently useful for commercial purposes?

Can I evaluate machine translation quality myself?

The first and perhaps most obvious way of measuring the performance of a machine translation system is by looking at its output and deciding for yourself whether it ‘looks good enough’. You can even compare the output of a couple of different machine translation providers and decide which one you like best.

However, this method lacks scientific backing, as you can look at or compare only a limited number of sentences or paragraphs. Even then, forming a sound opinion proves difficult: which characteristics of the output do you find important? And what if you do not understand the target language? Or, even more importantly: what if someone else has a different opinion? How do you substantiate your preference? Clearly, a more objective approach is called for.

A more reliable human evaluation of machine translation quality

First of all, the opinion of a single person does not suffice as proof of quality when it comes to machine translation output. Any human evaluation should always involve more than one person. And not just any person for that matter: preferably a linguist or translator who is knowledgeable about the subject of the text and who is able to give their professional opinion on the translation.

We should also focus on the goal of the evaluation. Do you want to get an impression of the quality of the output? Or would you like to know whether using machine translation will increase the efficiency of your translation workflow? Or perhaps both? Clear instructions are required in order to produce the most detailed and specific evaluation possible. If you want to collect as many opinions as possible to make informed decisions on the future of your translation process, you could always use a little help.

Evaluating machine translation output with the MT Evaluation Tool

The CrossLang MT Evaluation Tool allows you to rate various types of translation based on exactly these questions. Evaluators can compare a machine translation to a human translation or a post-edited version, or compare machine translation output from different providers. The tool provides a controlled environment in which test linguists are presented with a specific type of task.

An evaluation can consist of several tasks, depending on the ultimate goal. In some cases it might suffice to let evaluators go through a certain number of sentences and indicate for each one which translation they prefer. Other times it might be useful to let them indicate why exactly they prefer one option over the other (this is especially interesting when custom machine translation engines are being built).

Afterwards, the results of these tasks can be consolidated into clear statistics, which also take into account the time it took to complete each task. The results from these evaluations can help you gain more insight into your localization workflow and processes, and into how these can be improved.
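To give an idea of what such a consolidation could look like, here is a minimal sketch in Python. It is not how the MT Evaluation Tool works internally: the record layout (evaluator, sentence, preferred system, seconds spent), the names `provider_x` and `provider_y`, and the `summarize` function are all assumptions made up for illustration.

```python
from collections import Counter
from statistics import mean

# Hypothetical judgments, invented for illustration:
# (evaluator, sentence_id, preferred_system, seconds_spent)
judgments = [
    ("eval_a", 1, "provider_x", 12.0),
    ("eval_a", 2, "provider_y", 20.0),
    ("eval_b", 1, "provider_x", 8.0),
    ("eval_b", 2, "provider_x", 16.0),
]


def summarize(judgments):
    """Return the preference share per system and the mean time per judgment."""
    if not judgments:
        raise ValueError("at least one judgment is required")
    # Count how often each system was preferred.
    counts = Counter(preferred for _, _, preferred, _ in judgments)
    total = sum(counts.values())
    shares = {system: n / total for system, n in counts.items()}
    # Average time spent on a single judgment, in seconds.
    avg_seconds = mean(seconds for _, _, _, seconds in judgments)
    return shares, avg_seconds


shares, avg_seconds = summarize(judgments)
for system, share in sorted(shares.items()):
    print(f"{system}: preferred in {share:.0%} of judgments")
print(f"mean time per judgment: {avg_seconds:.1f} s")
```

In a real evaluation you would probably also break these figures down per evaluator and per sentence, so that one fast or one very opinionated evaluator does not dominate the totals.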

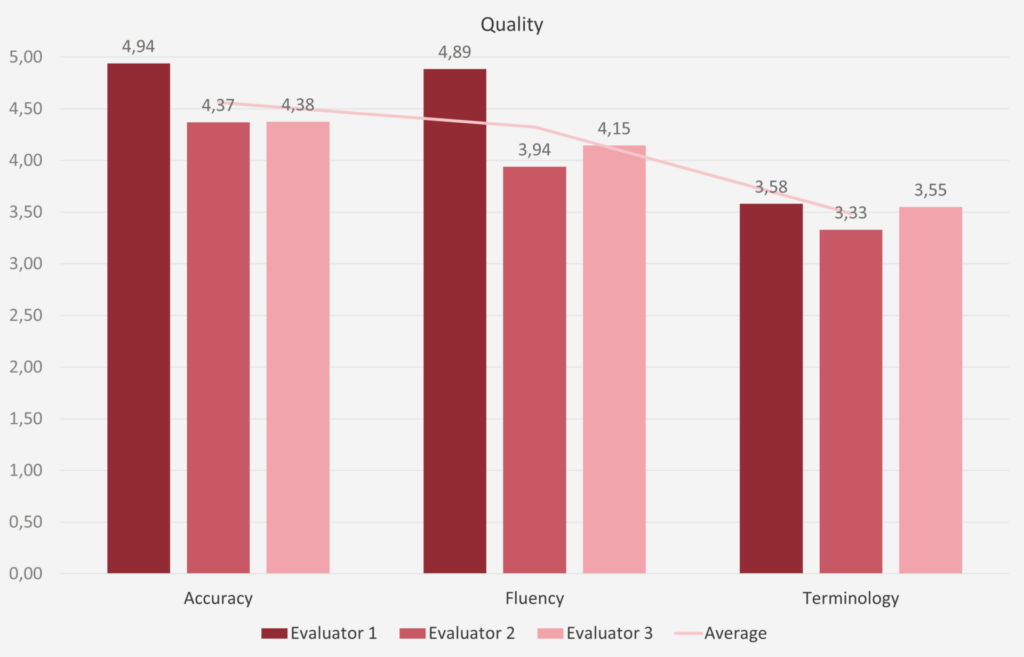

Results of a human evaluation

Depending on the purpose of the evaluation task, various conclusions can be drawn. For example, results can show that, for a specific language pair and topic, one machine translation provider generates better output than another. The next time you want to apply machine translation in your translation process, you will be confident in your choice of provider.

Alternatively, results from the MT Evaluation Tool can indicate how much time you could save by applying a post-editing step in your localization process. Research has indicated that a linguist is able to process two or three times as many words in a day when post-editing machine translation output, compared to translating from scratch.
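It can help to turn such a productivity factor into a time saving. The short Python sketch below does only the arithmetic: if a linguist handles `speedup` times as many words per day, the same volume takes `1 / speedup` of the original time. The function name `time_saved_fraction` is an assumption for illustration, and the factors 2 and 3 are simply the ones quoted above, not measurements.

```python
def time_saved_fraction(speedup):
    """Fraction of time saved on a fixed volume for a given productivity factor."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    # Same volume at `speedup` times the throughput takes 1/speedup of the time.
    return 1 - 1 / speedup


for factor in (2, 3):
    print(f"{factor}x throughput: {time_saved_fraction(factor):.0%} less time")
```

In other words, doubling throughput halves the time needed for a given volume, and tripling it saves roughly two thirds.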

Many companies and translators are still hesitant when it comes to using machine translation, but the opportunity to see and evaluate the output for yourself will give you a better understanding of the results and benefits.

Everything comes at a cost

The downside of having evaluations performed by humans is, well, the human factor itself. Opinions on what a ‘good’ translation means exactly can vary strongly from one evaluator to the next. The same goes for the time spent on a task: some people simply work faster than others. And then there is the matter of cost: a human evaluation requires a certain amount of time and money.
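One common way to quantify how much two evaluators differ is an agreement statistic such as Cohen's kappa, which corrects the raw agreement rate for agreement expected by chance. The sketch below is an illustration only: the text above does not say which measure, if any, the MT Evaluation Tool uses, and the labels and the `cohens_kappa` function are assumptions invented for this example.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two evaluators who labelled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both evaluators must label the same non-empty set of items")
    n = len(labels_a)
    # Observed agreement: share of items with identical labels.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, based on how often each evaluator uses each label.
    freq_a = Counter(labels_a)
    freq_b = Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        # Both evaluators used a single identical label throughout.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical preferences of two evaluators for the same four sentences.
evaluator_a = ["x", "x", "y", "y"]
evaluator_b = ["x", "y", "y", "y"]
kappa = cohens_kappa(evaluator_a, evaluator_b)
print(f"kappa = {kappa:.2f}")
```

A value close to 1 suggests the evaluators largely agree, while a value close to 0 suggests their agreement is no better than chance, which is a signal that the instructions may need to be clearer.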

However, the benefits of a human evaluation are difficult to ignore, as you gain full insight into your translation process, down to the tiniest detail.